Running neural network predictions at production scale takes a massive amount of computation. As a first optimization, rather than executing all of the underlying mathematical operations with ordinary 32-bit or 16-bit floating point operations on CPUs or GPUs, we apply a technique called quantization that allows us to work with integer operations instead. This enables us to reduce the total amount of memory and computing resources required to make useful predictions with our neural network models.

If it’s raining outside, you probably don’t need to know exactly how many droplets of water are falling per second - you just wonder whether it’s raining lightly or heavily. Similarly, neural network predictions often don't require the precision of floating point calculations with 32-bit or even 16-bit numbers. With some effort, you may be able to use 8-bit integers to calculate a neural network prediction and still maintain the appropriate level of accuracy.
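To make the idea of quantization concrete, here is a minimal sketch in plain Python. It assumes one common scheme, a linear mapping from the minimum and maximum of a set of values onto the 8-bit range 0 to 255; the post does not say which scheme the TPU toolchain uses, and the function names and sample weights are purely illustrative.

```python
def quantize(values, num_bits=8):
    """Map floats onto integers in [0, 2**num_bits - 1] with a linear (min/max) mapping.

    Assumption: this min/max scheme is chosen for illustration only.
    """
    qmax = 2 ** num_bits - 1
    lo, hi = min(values), max(values)
    # Fall back to a scale of 1.0 when all values are identical (avoids dividing by zero).
    scale = (hi - lo) / qmax if hi > lo else 1.0
    quantized = [round((v - lo) / scale) for v in values]
    return quantized, scale, lo


def dequantize(quantized, scale, lo):
    """Recover approximate float values from the quantized integers."""
    return [q * scale + lo for q in quantized]


weights = [-1.0, -0.25, 0.0, 0.5, 1.0]  # illustrative values, not from the post
q, scale, lo = quantize(weights)
restored = dequantize(q, scale, lo)

# The round-trip error is bounded by half of one quantization step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, max_error)
```

Each value now fits in a single byte instead of four, at the cost of a small, bounded rounding error. Whether that error is acceptable depends on the model, which is why the post says "with some effort".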

How many multiplication operations would you need at production scale? In July 2016, we surveyed six representative neural network applications across Google’s production services and summed up the total number of weights in each neural network architecture. You can see the results in the table below.

(MLP: Multi Layer Perceptron, LSTM: Long Short-Term Memory, CNN: Convolutional Neural Network)

As you can see in the table, the number of weights in each neural network varies from 5 million to 100 million. Every single prediction requires many steps of multiplying processed input data by a weight matrix and applying an activation function.

The multiplications and additions in a neural network layer can be written as a matrix multiplication. The outputs of this matrix multiplication are then processed further by an activation function. Even when working with much more complex neural network model architectures, multiplying matrices is often the most computationally intensive part of running a trained model.

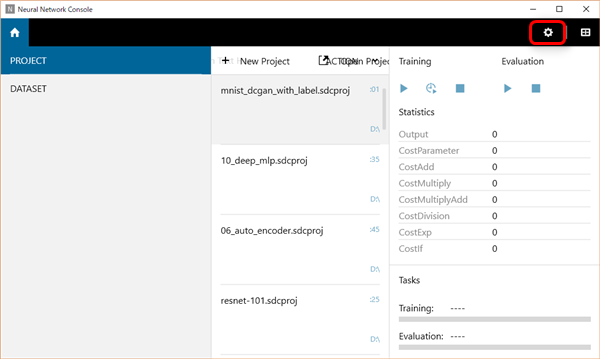


Google’s first Tensor Processing Unit (TPU) on a printed circuit board (left); TPUs deployed in a Google datacenter (right)

We announced the TPU last year and recently followed up with a detailed study of its performance and architecture. In short, we found that the TPU delivered 15–30X higher performance and 30–80X higher performance-per-watt than contemporary CPUs and GPUs. These advantages help many of Google’s services run state-of-the-art neural networks at scale and at an affordable cost. In this post, we’ll take an in-depth look at the technology inside the Google TPU and discuss how it delivers such outstanding performance.

Although Google considered building an Application-Specific Integrated Circuit (ASIC) for neural networks as early as 2006, the situation became urgent in 2013. That’s when we realized that the fast-growing computational demands of neural networks could require us to double the number of data centers we operate. Usually, ASIC development takes several years. In the case of the TPU, however, we designed, verified, built and deployed the processor to our data centers in just 15 months. Norm Jouppi, the tech lead for the TPU project (also one of the principal architects of the MIPS processor), described the sprint this way: "We did a very fast chip design. We started shipping the first silicon with no bug fixes or mask changes. Considering we were hiring the team as we were building the chip, then hiring RTL (circuitry design) people and rushing to hire design verification people, it was hectic."

A neural network takes input data, multiplies it by a weight matrix and applies an activation function. For example, if you have three inputs and two neurons with a fully connected single-layer neural network, you have to execute six multiplications between the weights and inputs and add up the multiplications in two groups of three.
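The three-input, two-neuron example can be sketched in a few lines of plain Python. The input values, the weights and the choice of ReLU as the activation function are assumptions made for illustration; the post does not name a specific activation function here.

```python
def relu(x):
    """Rectified linear unit, used here as an assumed activation function."""
    return max(0.0, x)


def dense_layer(inputs, weights, activation=relu):
    """Fully connected layer: one row of weights per neuron.

    Returns the activated outputs and the number of multiplications performed.
    """
    outputs = []
    multiplications = 0
    for neuron_weights in weights:
        total = 0.0
        for w, x in zip(neuron_weights, inputs):
            total += w * x  # one multiplication and one addition per weight
            multiplications += 1
        outputs.append(activation(total))
    return outputs, multiplications


inputs = [1.0, 2.0, 3.0]            # three inputs (illustrative values)
weights = [[0.5, -1.0, 1.0],        # weights of neuron 1
           [-1.0, 0.5, -0.5]]       # weights of neuron 2

outputs, multiplications = dense_layer(inputs, weights)
print(outputs, multiplications)  # [1.5, 0.0] 6
```

The two inner sums are exactly the two groups of three multiplications, and stacking the weight rows is what makes the whole layer a matrix-vector product.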
